Cracking Tic-Tac-Toe!

Use the board below to play against a simple Reinforcement Learning agent that uses Exhaustive Search to find the best possible action! You can try as many times as you'd like, but don't expect to win 😉

Next player: X

How does it work?

Very briefly, this game was solved using an Exhaustive Search algorithm where an agent analysed each possible state of the game to find the best possible action to maximize its reward.

That summary probably makes a lot of sense if you're comfortable with Reinforcement Learning's concepts already, but if not, good, because I'll dive deeper to try to explain all that jargon 😉.

As illustrated by the image above, an agent is an actor, one player in the game that can take actions. The game of Tic Tac Toe has two players, thus two agents will take turns to complete the game. An agent can be a human, maybe playing against another human, but in this context, an agent is a bot. Notice that the concept of "intelligence" does not enter the definition of an agent. It is entirely ok for an agent to play completely randomly.

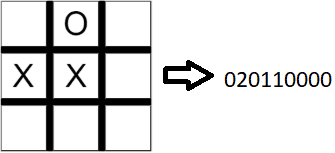

At the start of a game of Tic Tac Toe, the board is empty, but the agent can only know this once another subject reads the board and translates it into a state, this is, a simplified representation of the information available in the environment. Think about your interpreter as your eyes, which communicates the board into electric signals your brain can process. In this case, the interpreter transforms the actual board into a string of 9 numbers that is communicated to the agent.

The next piece of information an agent needs to evaluate the result of their decisions is a reward, but this only comes after the agent takes an action, thus when the game starts there are no rewards.

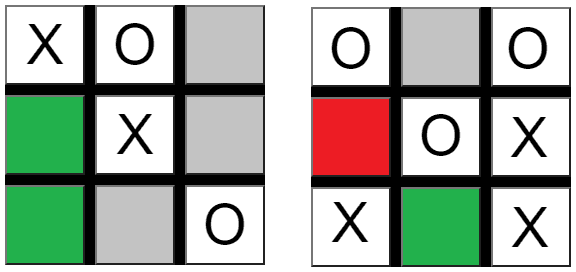

During training, the agent plays against itself in a modified version of the game where it is allowed to roll back one decision and take another. This way, the agent can evaluate the result of every possible move, as illustrated by the figure below.

This strategy, called Exhaustive Search, is the simplest way to find the best strategy in a deterministic environment (where there is no randomness). Tic Tac Toe is a simple enough game where this strategy can be successful. In principle, one would think there are possible boards, but because the game finished as soon as one player has marks in line, there are only possible boards.

In more complex games, such as Chess, this number is absurdly large, therefore this strategy wouldn't be tractable.

Once the agent has analysed each of the possible boards and their outcomes, it assigns one of three values to each valid move: if the move will make the agent lose, if it will make it tie and if it will force a win.

During game time, the engine will simply look up these pre-computed values and choose the best possible action if there is one available